Here are some highlights if you don’t feel like reading:

- Be very careful – your resulting config may pass flood-based routing protocol packets, spanning tree BPDUs, and any other broadcast or layer two traffic occurring on your network.

- FortiGate tunnel interfaces doing VXLAN encapsulation cannot offload IPSec to the hardware NPU, so throughput may hit an upper limit even if you don’t have MTU problems.

- FortiGate VXLAN encapsulation cannot involve aggregate interfaces (e.g. LACP/LAG/MLAG/MC-LAG/port-channel, or whatever your preferred vendor calls them).

This post will be a work in progress as I work through a data center migration without re-addressing systems and without service interruption to public-facing services. The servers run on internet-routable addresses and are dual-stack IPv4/IPv6, and both locations have full-table BGP peering using unique ASNs. There is no layer two connectivity between locations, and deploying such a P2P link would be cost prohibitive due to the annual contract requirement, diverse paths, etc. So… enter VXLAN. However, I don’t want the traffic traversing the internet in the clear, so now it’s VXLAN + VPN.

The idea is to migrate systems live: if internet traffic arrives at the old location for a system that has already been moved, it traverses the VXLAN to reach that system. We’ll focus on one VLAN at a time so that as little traffic as possible has to cross the VXLAN. Within each VLAN, we’ll move systems in groups matching the most specific BGP prefix that can safely be advertised. As each prefix completes migration, we advertise it from the new data center, so most of its traffic begins arriving there directly and comes off the VXLAN. For example:

- VLAN 10
  - IPv4: 192.0.8.0/22 (192.0.8.0-192.0.11.255)
  - IPv6: 2001:db8:0:10::/64
- VLAN 20
  - IPv4: 192.0.20.0/22 (192.0.20.0-192.0.23.255)
  - IPv6: 2001:db8:0:20::/64
- Old Data Center – BGP ASN 1
  - Advertising 192.0.8.0/22 and 2001:db8::/32
- New Data Center – BGP ASN 2
  - No advertisements yet

Given the above, we could start by migrating systems on VLAN 10 in the address range 192.0.8.1-192.0.8.254. As those systems move, internet traffic arriving at the old data center (ASN 1) would cross the VXLAN to reach them. Upon completion of that first group, we begin advertising 192.0.8.0/24 from the new data center (ASN 2). Because that is a more specific route, a reasonable amount of traffic destined for these migrated systems will begin arriving at the new data center and will not have to traverse the VXLAN. We then move on to the next /24. Once all of VLAN 10 has been migrated, we can stop advertising the /22 from the old data center and switch the new data center from its more-specifics back to the aggregated /22. On the IPv6 side, unfortunately, the VLAN 10 subnet is already the most specific prefix you can safely advertise, so all you can really do is begin advertising the /64 from the new data center once you’ve passed the halfway point of your migrations or, if specific targets see the most traffic, move those first and then begin advertising the /64 from the new data center.
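To make the prefix arithmetic above concrete, here is a minimal Python sketch using the standard library `ipaddress` module. It splits the old data center’s 192.0.8.0/22 into the /24 migration waves and applies longest-prefix matching, which is why a /24 advertised from ASN 2 pulls traffic away from the /22 still advertised by ASN 1. The route table is a toy list of (prefix, origin) pairs rather than real BGP, and `migration_waves` and `best_route` are hypothetical helper names.

```python
import ipaddress

# Aggregate from the example above, currently advertised by the old data center.
OLD_DC_AGGREGATE = ipaddress.ip_network("192.0.8.0/22")


def migration_waves(aggregate, new_prefix=24):
    """Split an aggregate into the more-specific prefixes migrated one at a time."""
    return list(aggregate.subnets(new_prefix=new_prefix))


def best_route(destination, routes):
    """Longest-prefix match over (network, origin) pairs; None if nothing covers it."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, origin) for net, origin in routes if addr in net]
    if not matches:
        return None
    # The most specific (longest) prefix wins, regardless of which ASN sent it.
    return max(matches, key=lambda match: match[0].prefixlen)


waves = migration_waves(OLD_DC_AGGREGATE)
print([str(w) for w in waves])

# The old DC keeps the /22; the new DC starts advertising the first finished /24.
routes = [(OLD_DC_AGGREGATE, "ASN 1 (old DC)"), (waves[0], "ASN 2 (new DC)")]
for dest in ("192.0.8.50", "192.0.9.50"):
    net, origin = best_route(dest, routes)
    print(dest, "->", net, "via", origin)
```

Routers do the same longest-prefix lookup in their forwarding tables, so a host in 192.0.8.0/24 follows the new data center’s /24 while 192.0.9.0/24 and the rest of the /22 keep following the old aggregate until their own wave is finished.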

Okay, getting back to the technical side. There was existing Fortinet FortiGate equipment at both locations, so it made sense to see if it could be leveraged for this migration. There’s also Arista equipment present, which offers a dramatically more robust VXLAN+EVPN solution, especially for much larger networks or heavy east/west traffic, but I’m going to try the FortiGate side first as the easier path.

Fortinet’s docs on VXLAN are pretty lacking, and I could not find much in the way of public content. You can find bits and pieces about carrying a single IP subnet over VPN, or a single VLAN in VXLAN without VPN (with no explanation of how to add more), but nothing at all about multiple VLANs in VXLAN across VPN.

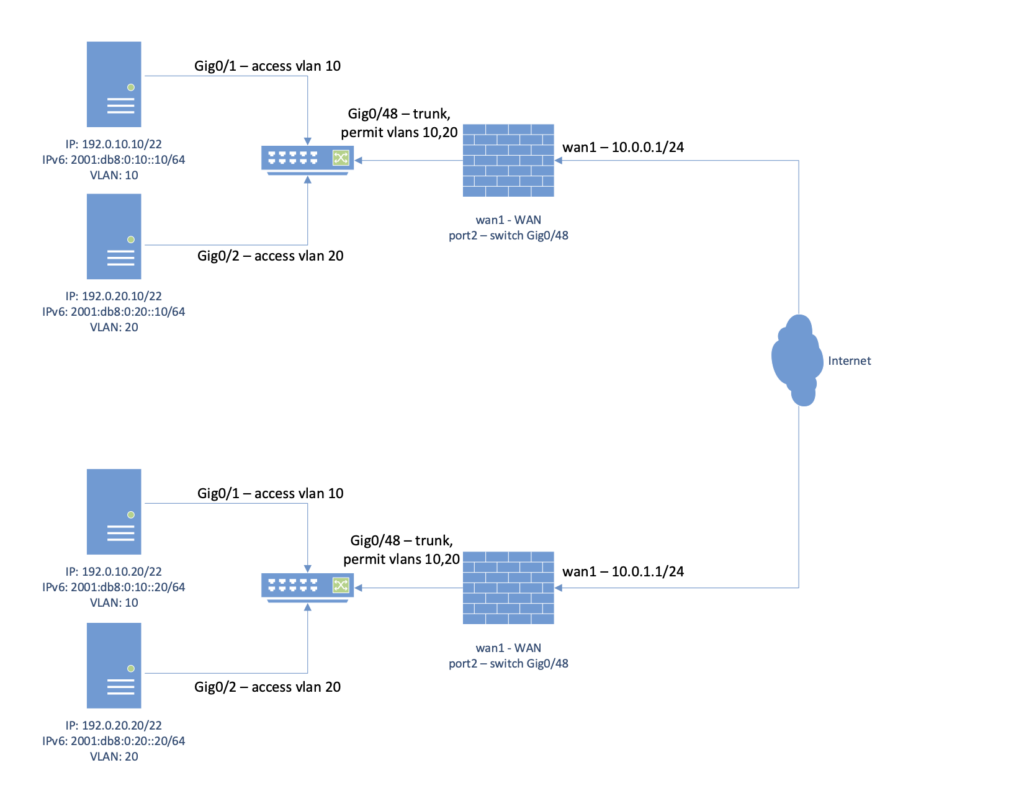

Scenario #1 – VLAN trunk to FortiGate then VXLAN-over-VPN

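The following was performed using FortiOS 6.2.4 between a 100E and a 60E.

Note #1 – FortiGate VXLAN encapsulation cannot involve aggregate interfaces, as the following attempted configuration shows: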

```
config system interface
    edit "LAG"
        set vdom "root"
        set type aggregate
        set member "wan1" "wan2"
        set lacp-speed fast
    next
end

config system switch-interface
    edit "vxlan-switch"
        set vdom "root"
        set member "LAG"
    next
end
```

The `set member` statement will not see the aggregate. This is unfortunate, because it means you will be constrained to the bandwidth of one physical port, and it of course introduces a number of failure scenarios. If you’re using this article to build a more permanent solution, you would ideally use an HA pair of FortiGates and set HA port monitoring on the ports that handle your VXLAN traffic, so that if one port goes down, a failover to the other FortiGate can occur. If you need more throughput than one physical port can provide, you’d need to do the VXLAN encapsulation on something other than a FortiGate, because its VXLAN functionality depends on a software switch. You may ultimately find you have to do this regardless, because FortiGates cannot do hardware offload of IPSec when the tunnel is set to do VXLAN encapsulation. (Reference)

Note #2 – all the documentation I could find about VXLAN in VPN, whether Fortinet’s or third-party pages, sets the tunnel interface’s encapsulation-address to ipv4, which then requires you to specify next-hop targets. I could not find any combination of settings to get that working in a VLANs-in-VXLAN scenario, even when I assigned IP addresses to the tunnel interfaces on each side. Setting the encapsulation address to ‘ike’ got it working.

Note #3 – this specific configuration does not allow for VLAN translation between data centers, as it passes raw frames including the 802.1q VLAN tag. If you cannot use the same VLAN IDs on both sides, you will need switches capable of performing VLAN translation on the trunk coming from the FortiGate on each side. For example, lower-end Cisco switches cannot do this, but more capable ones can, and you’d configure it at the trunk interface level with the “switchport vlan mapping” command(s).
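Because the tunnel carries the 802.1q tag untouched, VLAN translation amounts to rewriting the 12-bit VLAN ID inside that tag. The Python sketch below models what a mapping-capable switch does to each frame; it is a conceptual illustration, not switch or FortiGate code, and `get_vlan_id`, `translate_vlan`, `build_tagged_frame`, and the sample frame are hypothetical. It assumes a single 802.1Q tag (TPID 0x8100) right after the source MAC and leaves the priority (PCP) and DEI bits intact.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q tag


def get_vlan_id(frame: bytes):
    """Return the 802.1Q VLAN ID of an Ethernet frame, or None if it is untagged."""
    if len(frame) < 18:
        return None
    tpid, tci = struct.unpack("!HH", frame[12:16])
    if tpid != TPID_8021Q:
        return None
    return tci & 0x0FFF  # VLAN ID is the low 12 bits of the Tag Control Information


def translate_vlan(frame: bytes, mapping: dict) -> bytes:
    """Rewrite the VLAN ID using mapping {old_vid: new_vid}, keeping PCP/DEI bits."""
    vid = get_vlan_id(frame)
    if vid is None or vid not in mapping:
        return frame  # untagged or unmapped frames pass through unchanged
    (tci,) = struct.unpack("!H", frame[14:16])
    new_tci = (tci & 0xF000) | (mapping[vid] & 0x0FFF)
    return frame[:14] + struct.pack("!H", new_tci) + frame[16:]


def build_tagged_frame(vid: int, pcp: int = 0) -> bytes:
    """Hypothetical broadcast ARP frame tagged with the given VLAN ID."""
    dst = b"\xff" * 6
    src = bytes.fromhex("02000000000a")
    tag = struct.pack("!HH", TPID_8021Q, (pcp << 13) | vid)
    return dst + src + tag + struct.pack("!H", 0x0806) + bytes(28)


frame = build_tagged_frame(vid=10, pcp=5)
translated = translate_vlan(frame, {10: 110})
print(get_vlan_id(frame), "->", get_vlan_id(translated))
```

A real switch also recomputes the frame check sequence after the rewrite; the sketch omits the FCS entirely.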

So here we go, VPN first. Note that you cannot convert an existing IPSec VPN interface to VXLAN encapsulation, so if you’re trying to convert an existing VPN to carry VXLAN, you’ll have to tear down every aspect of its config and rebuild it; thanks Fortinet. Obviously, tune the tunnel parameters to your own preference (encryption, DH, timers, etc.).

Firewall #1 at old data center:

```
config vpn ipsec phase1-interface
    edit "vpn-to-DC2"
        set interface "wan1"
        set keylife 28800
        set peertype any
        set net-device enable
        set proposal aes256-sha256
        set dpd on-idle
        set dhgrp 14
        set encapsulation vxlan
        set encapsulation-address ike
        set remote-gw 10.0.1.1
        set psksecret SUPERSECRET
    next
end

config vpn ipsec phase2-interface
    edit "vpn-to-DC2p2"
        set phase1name "vpn-to-DC2"
        set proposal aes256-sha256
        set dhgrp 14
        set auto-negotiate enable
        set keylifeseconds 14400
    next
end

config system switch-interface
    edit "vpn-vxlan-sw"
        set vdom "root"
        set member "port2" "vpn-to-DC2"
    next
end
```

Firewall #2 at new data center:

```
config vpn ipsec phase1-interface
    edit "vpn-to-DC1"
        set interface "wan1"
        set keylife 28800
        set peertype any
        set net-device enable
        set proposal aes256-sha256
        set dpd on-idle
        set dhgrp 14
        set encapsulation vxlan
        set encapsulation-address ike
        set remote-gw 10.0.0.1
        set psksecret SUPERSECRET
    next
end

config vpn ipsec phase2-interface
    edit "vpn-to-DC1p2"
        set phase1name "vpn-to-DC1"
        set proposal aes256-sha256
        set dhgrp 14
        set auto-negotiate enable
        set keylifeseconds 14400
    next
end

config system switch-interface
    edit "vpn-vxlan-sw"
        set vdom "root"
        set member "port2" "vpn-to-DC1"
    next
end
```

Switch config at both data centers – Cisco-specific, but easy enough to adapt if you use something else. It is VERY important that you do not just set the port mode to trunk: this config will pass all traffic it receives, and you do not want to pass traffic from VLANs that are not intended to cross the VXLAN. So, set the allowed VLANs:

```
vlan 10
!
vlan 20
!
interface GigabitEthernet0/1
 switchport access vlan 10
 switchport mode access
 spanning-tree portfast
 spanning-tree bpduguard enable
!
interface GigabitEthernet0/2
 switchport access vlan 20
 switchport mode access
 spanning-tree portfast
 spanning-tree bpduguard enable
!
interface GigabitEthernet0/48
 switchport trunk allowed vlan 10,20
 switchport mode trunk
!
```

At this point, the VPN came up, but I was not seeing traffic traverse it. No, it is not a rules issue: FortiGate software switches now default to an implicit allow, where packets traverse them freely without any policies being created. You can override this per switch under “config system switch-interface” with “set intra-switch-policy explicit”. Running ‘diag sniffer packet vpn-to-DC2 none 4’ on the old data center FortiGate revealed it was receiving 802.1q-tagged packets. I ended up having to set “vlanforward enable” on both FortiGates, on the ports trunked to the switches as well as on the phase1-interface tunnel interfaces:

New data center FortiGate:

```
config system interface
    edit "vpn-to-DC1"
        set vlanforward enable
    next
    edit "port2"
        set vlanforward enable
    next
end
```

Old data center FortiGate:

```
config system interface
    edit "vpn-to-DC2"
        set vlanforward enable
    next
    edit "port2"
        set vlanforward enable
    next
end
```

This change was required because, in FortiOS 5.0.10+, 5.2.2+, 5.4.0+, and newer, the default is not to forward VLAN-tagged packets unless a matching VLAN interface is defined. Enabling vlanforward allowed me to start seeing ARP, spanning tree, and similar broadcast traffic. I fired up my test devices on both VLANs: DHCP worked, IPv6 router advertisements were received, and the devices had access as if they were on the remote network.

Performance is fairly piss poor under speed tests. No doubt I’m running into MTU issues, given that normal-size Ethernet frames pick up 54 bytes of VXLAN encapsulation and then a varying amount of AES+SHA IPSec overhead depending on packet size. This could be a difficult problem to solve short of lowering the MTU on your layer two networks, because path MTU discovery will not work in this scenario: the FortiGate is bridging these frames rather than routing them, so it cannot send back an ICMP “fragmentation needed” (IPv4) or “packet too big” (IPv6) message the way a router would. If you were in a virtualized data center, or had true layer two between the FortiGates, you may be able to run jumbo frames on the ‘WAN’ and solve the problem, but if you’re doing this across the real internet, an MTU change would be the only reliable long-term option. For a quick migration on a network-by-network basis, you may be able to tolerate this issue until finished.
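To put rough numbers on the MTU problem, the Python sketch below estimates the on-the-wire size of a tunneled packet and the largest inner packet that fits without fragmentation. It rests on assumptions that are not confirmed for FortiOS: the 54 bytes of VXLAN overhead are taken as outer IPv4 (20) + UDP (8) + VXLAN (8) + the tagged inner Ethernet header (18); the whole VXLAN packet is assumed to ride in ESP tunnel mode with a new 20-byte IPv4 header, AES-CBC (16-byte IV and block) and HMAC-SHA-256-128 (16-byte ICV); and NAT-T, when used, adds an 8-byte UDP header. All constants and function names are illustrative.

```python
# Assumed header sizes in bytes (see the assumptions above).
IPV4_HDR = 20
UDP_HDR = 8
VXLAN_HDR = 8
ETH_HDR_DOT1Q = 18  # 14-byte Ethernet header + 4-byte 802.1Q tag, no FCS
VXLAN_OVERHEAD = IPV4_HDR + UDP_HDR + VXLAN_HDR + ETH_HDR_DOT1Q  # 54

ESP_HDR = 8            # SPI + sequence number
AES_CBC_IV = 16
ESP_TRAILER_FIXED = 2  # pad-length byte + next-header byte
ICV_SHA256_128 = 16
AES_BLOCK = 16


def round_up(n: int, block: int) -> int:
    """Round n up to the next multiple of block (ESP padding for CBC ciphers)."""
    return ((n + block - 1) // block) * block


def wire_size(inner_ip_len: int, nat_t: bool = False) -> int:
    """Estimated outer packet size for one inner IP packet of inner_ip_len bytes."""
    vxlan_packet = inner_ip_len + VXLAN_OVERHEAD
    encrypted = round_up(vxlan_packet + ESP_TRAILER_FIXED, AES_BLOCK)
    size = IPV4_HDR + ESP_HDR + AES_CBC_IV + encrypted + ICV_SHA256_128
    return size + (UDP_HDR if nat_t else 0)


def max_inner_mtu(wan_mtu: int = 1500, nat_t: bool = False) -> int:
    """Largest inner IP packet whose tunneled form still fits in wan_mtu."""
    mtu = wan_mtu
    # wire_size never decreases as mtu grows, so the first fit from the top is the max.
    while mtu > 0 and wire_size(mtu, nat_t) > wan_mtu:
        mtu -= 1
    return mtu


print("VXLAN overhead:", VXLAN_OVERHEAD)
print("1500-byte inner packet on the wire:", wire_size(1500))
print("Largest inner MTU over a 1500-byte WAN:", max_inner_mtu(1500))
print("Same, with NAT-T:", max_inner_mtu(1500, nat_t=True))
```

Under these assumptions, a full 1500-byte inner packet becomes a 1628-byte ESP packet and must be fragmented on a standard 1500-byte internet path, while inner packets of 1384 bytes or less (1368 with NAT-T) fit. Treat these as ballpark figures for choosing a reduced host or VLAN MTU, and confirm them with a packet capture on your own tunnel.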