I won’t go into intimate detail, since it all involved a lot of poking around online, but I’ll give a rundown of all the stupid things I ran into while trying to go from vCenter 5.5 on Windows Server 2008 R2 to a 6.5 appliance using the Appliance Migration Assistant and Appliance Installer.

- First issue: SSL certificates. The Migration Assistant began bitching about names and/or CA trust issues. I ultimately needed to use the SSL Certificate Automation Tool, a horrid batch file, to work through this, along with some openssl commands that saved quite a bit of time. Here’s what I ended up doing:

- Grab the SSL Certificate Automation Tool from VMware (but don’t follow that article yet; it has a bunch of pointless stuff): https://kb.vmware.com/selfservice/viewdocument.do?cmd=displayKC&docType=kc&externalId=2057340

- Do yourself a favor and extract it at the root of C:\ with a simple directory name, because you’re going to be typing in and out of it a lot.

- Edit the environment variable file and pre-define all your paths; it’s ten times easier than typing them at the command line.

- Run the tool and generate new cert requests for vCenter, the Inventory Service, the Web Client, and Update Manager, since the vCenter 6.5 migration tool now includes Update Manager. I got these tips from this thread, where mbrkic has some excellent guidance: https://communities.vmware.com/thread/546223 I didn’t follow his steps for creating openssl.cnf, though, as the automation tool does that part for you.

- Next, instead of taking your certs to a CA, sign them yourself: go into each of the SSL Certificate Automation Tool’s `requests\*` subdirectories (there will be four of them) and run:

`"C:\Program Files\VMware\Infrastructure\Inventory Service\bin\openssl.exe" req -nodes -new -x509 -keyout rui.key -out rui.crt -days 3650 -config csr_openssl.cnf`

Running that once in each subdirectory creates all four of your certificates.

- After running the above, rename the resulting rui.crt to rui.pem

- Your SSL Certificate Automation Tool ssl-environment.bat file should reference the new certificates by their rui.pem names; make sure to get the paths correct since each of the four will be different.

- Use the SSL Cert Automation Tool to update each of the four certs; it will handle the install and restarting of services.

- You should be good to go now.
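If you’d rather not type that openssl command four times, the sign-and-rename steps above can be scripted. Below is a minimal Python sketch, assuming Python 3 is available on the vCenter server and the tool was extracted to a hypothetical `C:\ssltool` (adjust `REQUESTS_DIR` to wherever you put it). It runs the exact openssl command from above in each request subdirectory, renames `rui.crt` to `rui.pem`, and returns the new paths so you can copy them into `ssl-environment.bat` by hand; it deliberately does not edit that file, since its variable names aren’t assumed here.

```python
from __future__ import annotations

import subprocess
from pathlib import Path

# openssl.exe bundled with the vCenter 5.5 Inventory Service (path from the post).
OPENSSL = Path(r"C:\Program Files\VMware\Infrastructure\Inventory Service\bin\openssl.exe")

# Hypothetical location of the SSL Certificate Automation Tool's requests folder.
REQUESTS_DIR = Path(r"C:\ssltool\requests")


def self_sign_command(openssl: Path) -> list[str]:
    """Build the self-signing command from the post as an argument list."""
    return [
        str(openssl), "req", "-nodes", "-new", "-x509",
        "-keyout", "rui.key", "-out", "rui.crt",
        "-days", "3650", "-config", "csr_openssl.cnf",
    ]


def self_sign_all(requests_dir: Path, openssl: Path = OPENSSL) -> list[Path]:
    """Self-sign each request subdirectory, rename rui.crt to rui.pem,
    and return the rui.pem paths for ssl-environment.bat."""
    pem_paths = []
    for subdir in sorted(p for p in requests_dir.iterdir() if p.is_dir()):
        if not (subdir / "csr_openssl.cnf").exists():
            continue  # not one of the tool's request directories
        # cwd=subdir so rui.key/rui.crt are written into that directory.
        # If the .cnf doesn't disable prompting, openssl asks on the console.
        subprocess.run(self_sign_command(openssl), cwd=subdir, check=True)
        # Path.replace overwrites an existing rui.pem from an earlier run.
        pem_paths.append((subdir / "rui.crt").replace(subdir / "rui.pem"))
    return pem_paths
```

Usage: call `self_sign_all(REQUESTS_DIR)` and print each returned path; those are the four `rui.pem` locations the batch file needs.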

- Next issue: the assistant is clear, so you move on to the appliance installer. Well, the ESXi host you deploy the VCSA to must be running 6.0 or later. I was running my old vCenter on a free-license 5.5 host that was just managing a cluster of Enterprise Plus hosts. I had to shut down all the VMs and upgrade that vCenter host to 6.5, and then things were happy, or so I thought….
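The installer only discovers this late, so a version check before you start can save a failed run. A minimal Python sketch: you read the host’s version string yourself (for example from the host client) and pass in just the numeric part; the 6.0 minimum is the requirement described above. It checks the version only, not the license edition, so it would not have caught the free-license problem.

```python
from __future__ import annotations


def parse_version(version: str) -> tuple[int, ...]:
    """Turn a numeric dotted version such as "6.5.0" into (6, 5, 0)."""
    return tuple(int(part) for part in version.split("."))


def esxi_target_ok(esxi_version: str, minimum: str = "6.0") -> bool:
    """True if the target ESXi host meets the installer's minimum version.

    Tuple comparison means (6, 0, 0) >= (6, 0) and (5, 5, 0) < (6, 0).
    """
    return parse_version(esxi_version) >= parse_version(minimum)
```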

- Running the appliance installer again, I’m ready for an attempt that will hopefully make it out of the starting gate. It runs, gets stuck at “Installing RPM” at 80%, and ultimately fails with some type of install timeout. This, it turns out, was the result of trying to deploy the appliance to an ESXi host running a free license. The free license is missing relevant features, but the installer doesn’t test for that and warn you; it just wastes a few hours of your time and fails after a while. I found a website with steps to switch back to an eval license, which is fully featured; you can switch back to your free license afterward.

What the site didn’t make clear was that after removing license.cfg and doing the rename, you need to go back into the fat client and actually select the eval license radio button if you had previously been on a free license. So I flip back to eval, run the installer for a third attempt, and we’re past that issue and on to phase two.

- Finally, my first seemingly good attempt at using the installer / migration tool gets to phase two and dies with “error attempting backup spbm data”. There are hints this may be related to the SSL cert swap from issue #1, but I couldn’t find anything that definitively pointed to it, so I ultimately gave up and went ahead with an Inventory Service database reset as per VMware KB 2042200: https://kb.vmware.com/selfservice/viewdocument.do?cmd=displayKC&docType=kc&externalId=2042200

Here’s a possible second cause of this, with some info: http://notes.doodzzz.net/2015/03/24/vsphere-6-0-home-lab-upgrade-issues-faced/

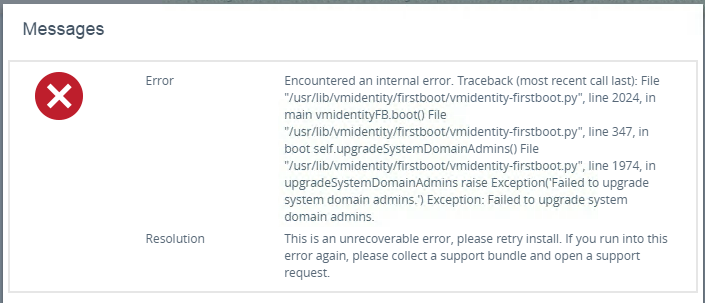

https://communities.vmware.com/thread/505529

- Okay, the inventory database is reset, and I begin my fourth attempt at this. Think it will work? FUCK NO. Nothing VMware produces outside of the hypervisor actually fucking works with any consistency, and when it fucks up, you’d better have a snapshot, because it doesn’t just mildly fuck up, it goes North Korea nuclear style. The latest?

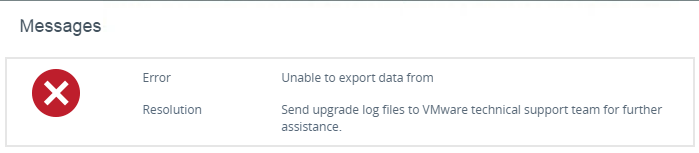

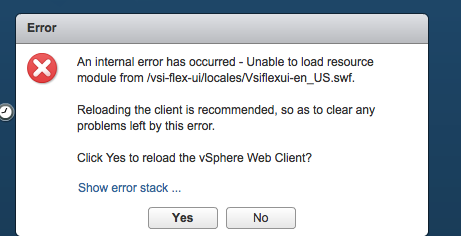

Oh yeah, that’s a great error; who knows wtf that means. Well, let’s delete my VCSA, because even the installer’s web interface acknowledges that something went wrong and the appliance must be deleted and deployed again. Yep, another three hours down the drain; wash, rinse, repeat. I found this post, which references part of the error, and the recommendation was to ensure forward and reverse DNS entries are present for the temporary Windows machine you’re running the appliance installer / migration tool from: https://communities.vmware.com/thread/544755 Huh? An FQDN and PTR for a temp machine that’s supposedly just moving data? The docs don’t mention that. Well, let me spend 30 seconds adding a DNS entry to see if it fixes what just wasted three fucking hours of my time. Great job, VMware.

Also, if you’re running on an IPv6-enabled network, make sure to check the post about adding a localhost entry for ::1 to the appliance before proceeding with phase two.
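Before burning another three hours, the forward and reverse entries can be verified from the temporary Windows machine itself. Below is a minimal Python sketch using only the standard `socket` module: it resolves the FQDN to an IP and checks that the PTR for that IP points back to the same name. The second helper scans hosts-file text for a `::1` localhost mapping, assuming that is the fix the IPv6 post describes and that the appliance uses the usual `/etc/hosts`. The resolvers are parameters only so the check can be exercised without real DNS.

```python
from __future__ import annotations

import socket
from typing import Callable


def forward_and_reverse_match(
    fqdn: str,
    forward: Callable[[str], str] = socket.gethostbyname,
    reverse: Callable[[str], tuple] = socket.gethostbyaddr,
) -> bool:
    """True if fqdn resolves to an IP (A record) and that IP resolves
    back to the same name (PTR record)."""
    try:
        ip = forward(fqdn)
        name, aliases, _addrs = reverse(ip)
    except OSError:  # socket.gaierror / socket.herror are OSError subclasses
        return False
    wanted = fqdn.rstrip(".").lower()
    return any(n.rstrip(".").lower() == wanted for n in [name, *aliases])


def hosts_has_ipv6_localhost(hosts_text: str) -> bool:
    """True if a non-comment hosts line maps ::1 to localhost."""
    for line in hosts_text.splitlines():
        fields = line.split("#", 1)[0].split()
        if len(fields) >= 2 and fields[0] == "::1" and "localhost" in fields[1:]:
            return True
    return False
```

On the temp machine, `forward_and_reverse_match(socket.getfqdn())` should return `True` before you launch the installer.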

- Okay, bullshit DNS entries are in place; let’s start attempt number five, and in three or four hours I’ll let you know if it worked…. Nah:

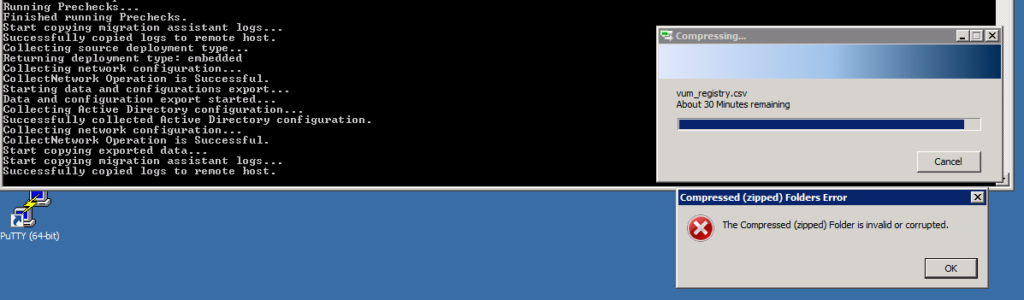

So where are we at, perhaps 12 to 15 wasted hours now? I noticed this on the vCenter 5.5 server console, though:

I guess we need to investigate update manager as that would seem to indicate it has some relation to this latest failure. Found this page:

https://www.stephenwagner.com/?p=1115

Same issues. It suggests I need to:

- Uninstall Update Manager

- Remove the temp files the previous attempts created, since the migration will try to grab the data from that archive each time.

- Reconnect the original server to the AD domain (non-issue in my case, running standalone)

- Start over again. LOL. Guess I’ll give attempt six a shot in the morning and let you know how it goes.

- Attempt six notes: it’s been sitting at “Starting vmware postgres” for a couple of hours now. Not sure if it will finish, but I found this site telling me what to expect in the meantime:

http://kenumemoto.blogspot.com/2017/03/vcenter-65-upgrade-problem-occurred.html

Whoa, it completed! I had to stare at that for a few hours, but then it moved along.

- Finally, following the process described in the VMware blog, it actually worked: https://blogs.vmware.com/vsphere/2017/01/vcenter-server-appliance-6-5-migration-walkthrough.html I was able to move off crap MS Windows to the VCSA, using far less CPU and far less memory, with no more Windows updates and some license costs eliminated.

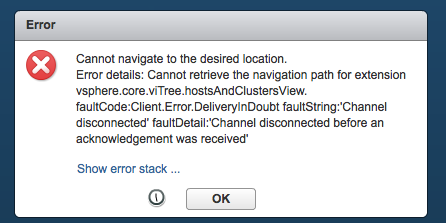

Of course, after all that, I’m now stuck, no longer able to use the reliable and easy thick/fat/C# client. I hate the web interface even more now that I’m forced to use it. Hell, just finding a browser that supports Flash properly these days is a challenge; I ended up having to install Firefox on my Macs and my PCs because Chrome is obviously a no-go, and Flash didn’t seem to work properly in Safari on the Mac. IE/Edge on Windows 10 is supposed to have Flash built in, but the built-in version doesn’t seem to be compatible with vCenter.

VMware, get the fucking hint: we don’t want a broken web interface. Get rid of Flash, and either make the HTML5 client work properly or let us use the fat client. Relying on Flash means you’re stuck with this bullshit:

This is a great commentary and actually helped me lower my FPM (fucks per minute) while exercising patience on what would normally be easy for most folks. Glad to know this has been a shit show for many others as well.

I had almost all of the same problems, plus one extra:

We have 4 vCenters. I started with the smallest, which only manages a single semi-production cluster of 2 hosts. The hosts are 100% identical DL380 Gen9 servers with identical firmware.

After finally getting the VCSA 6.5 migration completed, I set up VUM and upgraded the first host to 6.5, no worries. I then went to vMotion everything to the 6.5 host so I could upgrade the second host, and that failed: I could no longer vMotion due to CPU incompatibilities. That is when I noticed the migration had “helpfully” turned off Admission Control, EVC, HA, DRS, and pretty much every other cluster-level feature. But now I cannot turn on EVC because it says my 6.5 host doesn’t support the same CPU features as my 5.5 host. What the fuck? I wasted hours troubleshooting before I logged a ticket with VMware (thankfully we have a full support agreement), and they acknowledged it was a known issue that has affected only a few people. The fix isn’t ready yet. The workaround? Well, “just” power off every VM, edit their advanced configs, and power them back on… Is that ALL? Are you fucking SERIOUS, VMware? Luckily this wasn’t a production environment. Now I wait for the next patch before considering upgrading more vCenters.

I had some of the same issues, plus a cryptic error message about SQL. It turns out the ODBC connections on my 2008 R2 vCenter need to use the SQL Server Native Client 10.0 driver for the migration to work. You have to check both the vCenter and the vCenter Update Manager connections, which are configured in two different places.
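To see which driver each system DSN uses without clicking through both ODBC administrators, here’s a minimal Python sketch using the standard `winreg` module (Windows only). It lists system DSNs from both the 64-bit and 32-bit registry views, on the assumption that those are the “two different places” (Update Manager 5.x uses a 32-bit DSN), and flags any not on SQL Server Native Client 10.0. DSN names vary per install, so pick the vCenter and Update Manager entries out of the output yourself.

```python
from __future__ import annotations

# System DSNs are listed as name -> driver description values under this key.
DSN_KEY = r"SOFTWARE\ODBC\ODBC.INI\ODBC Data Sources"
REQUIRED_DRIVER = "SQL Server Native Client 10.0"


def read_system_dsns() -> list[tuple[str, str, str]]:
    """Return (view, dsn_name, driver) for system DSNs in both registry views."""
    import winreg  # standard library, but only present on Windows

    views = [("64-bit", winreg.KEY_WOW64_64KEY), ("32-bit", winreg.KEY_WOW64_32KEY)]
    rows = []
    for label, view_flag in views:
        try:
            key = winreg.OpenKey(
                winreg.HKEY_LOCAL_MACHINE, DSN_KEY, 0, winreg.KEY_READ | view_flag
            )
        except OSError:
            continue  # no system DSNs registered in this view
        with key:
            index = 0
            while True:
                try:
                    name, driver, _value_type = winreg.EnumValue(key, index)
                except OSError:
                    break  # EnumValue raises OSError once values run out
                rows.append((label, name, driver))
                index += 1
    return rows


def wrong_driver(rows: list[tuple[str, str, str]],
                 required: str = REQUIRED_DRIVER) -> list[tuple[str, str, str]]:
    """Keep only the DSNs whose driver is not the required one."""
    return [row for row in rows if row[2] != required]
```

Usage: `for view, name, driver in wrong_driver(read_system_dsns()): print(view, name, driver)` lists the DSNs you would still need to switch over.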

Try the HTML5 version, it works well! Flash is old af

The HTML5 version sucks as much as the Flash version. Half the time you think you’re changing the settings the dialog boxes indicate when you really aren’t. Or you need to manage something they haven’t yet ported to the HTML5 version, like Update Manager. VMware has been asleep at the wheel for a few years now.