Got the chance to deploy an XtremIO v3 (v3.0.1 OS) recently, to replace a VNX5500. Both run iSCSI, both handle VMware load exclusively, with PowerPath/VE on the VMware side for multipath I/O. Have to say, my initial impression is that this thing is a beast, but it does come with some drawbacks and some marketing fluff. I deployed a single-brick system, i.e. two controllers and one DAE (disk array enclosure) holding 25 × 800 GB flash drives.

First five complaints just to get those out of the way, and perhaps help others with at least one of the items:

1) The components arrive on a pallet with a rack attached, stacked in the order they must go into your rack. This means you are best off removing every single component (battery backup, battery backup, controller 2, DAE, controller 1, blank panel) from the rack, ensuring you keep them in the proper order on the floor, and then load them back into your rack bottom up.

Why is this necessary? Well, that’s because EMC’s engineers have no common sense or perhaps no real-world experience. Each component is a dramatically different length, and the difference can show up at both the front and the back of the rack. For example, the DAE is barely deeper than a third of a rack and you need to connect a bunch of cables to the back of it. The backup batteries (UPSes) are probably set eight inches back from the front of the rack, but extend to the back. The controllers, which sandwich the DAE from above and below, are full length, ensuring there’s no way to get cables into the DAE once they’re screwed into place.

The second reason is that if you try to rack the components by taking the first off the top, placing it in your rack, and working your way down, it’s nearly impossible to get the rails in place once something is on top of them. Your controller on the bottom is also a bitch to install if the DAE is already installed above it, because they use dramatically different rails.

What would make far more sense, you ask? Clearly labeling all the components as shipped, and putting them on the pallet in reverse order of how they are to be installed. Then you simply pop the first component off the top, and install it at the bottom of the space where they’ll all be going in your rack. Pop the next one off the top, install it above the last one. I guess I think like a programmer and they think like who knows what.

Now the helpful part. If you use square hole racks like most everyone, EMC equipment of course comes configured for stupid round-hole racks so you’ll find the rails that come with your controllers do not fit correctly. You can try to jam them in there and secure with a screw so they’ll at least not fall out, but that will be less than ideal. What you need to do is remove a screw from the mounting latch portion of the brackets, one on each end, and that will allow you to swivel the mounting latch around from round hole config to square hole config. Put the screw back in to secure it. Now they’ll easily drop into your rack. The other rails, such as the DAE and battery backups, are just generic crap that work for both types of racks, and don’t work well in either rack, so you’ll just have to live with those.

2) Second complaint: XtremIO, just like VNX, has an endless list of default usernames and passwords, and who knows if that list is complete or how many other back doors are possible in your new array. Here’s that list of users/passes: link

3) The management interface still uses Java; wtf?! Although I guess that’s better than the Flash garbage that VMware decided on with the vSphere web interface. And yes, as is the case with all of the other Java bullshit that may be scattered about your enterprise, you’ll probably run into version compatibility issues from one component to the next. For example, good luck trying to have a single management machine that can talk to an EMC CLARiiON, a Cisco UCS install and an EMC XtremIO array.

4) As best I can tell, there’s no way to have iSCSI initiators auto-register on the XtremIO side, at least in the sense of showing up as an available ‘host’ to add to a storage group like they would in the VNX and CX4 days. The reason this is a complaint is that if you’re deploying in a large VMware vCenter cluster, you may have a LOT of vSphere hosts, so you’re going to be doing a LOT of manual adding of initiator names on the XtremIO side just to let all your hosts connect. I could not find any command line reference that would make adding initiators en masse scriptable on the XtremIO side. If I later find a solution or am corrected on this, I’ll happily replace this complaint with a disclosure that I’m stupid.

On the VNX and CX4 arrays, your initiators would show up in the Unisphere interface as unregistered, or just as ‘hosts’ that are not connected to a storage group. Click them, add to group, done; easy, not a lot of extra work.

5) The fucking price. I admit the thing cranks out the IOPS and the MB/sec, but Jesus they charge an arm and a leg for it. You’d think they would drop the price and destroy the competition rather than rely on their existing customers to stay loyal with what can only be considered a ridiculous price for the hardware. For example, the flash drives my XtremIO came with are the HGST (formerly Hitachi prior to the Western Digital acquisition) model HUSMM8080ASS201: http://www.hgst.com/solid-state-storage/enterprise-ssd/sas-ssd/ultrastar-ssd800mm. These drives sell for between $2500 and $3000 street price. There are 25 of them in a single brick, so that’s $62,500 to $75,000 in drives. Your first brick also includes two generic PCs, with two ports each of 10 GbE, InfiniBand and Fibre Channel. On the front side, they have two flash drives and two spinning drives. Wrapping it up, the first brick includes two Eaton 5P power backups. All in all, if we are generous, EMC has put together a bundle of hardware that, if I purchased it myself, would probably cost about $90k to $100k USD street price. Then they add another $250k to $300k on top of that for the EMC magic beans.

So again, I get it, it’s from EMC, you trust EMC, your boss trusts EMC, their R&D (or the R&D of the companies they acquire) are pretty good, so you buy EMC instead of some of the other all flash array players, but it’s starting to get a little painful staying loyal when they’re marking up the underlying hardware by a factor of three to four.
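For anyone who wants to sanity-check the markup math, here is a minimal Python sketch. It uses only the rough street-price estimates quoted above (they are guesses from this post, not EMC list prices), and the function names are made up for illustration.

```python
# Back-of-the-envelope check of the markup argument.
# Every dollar figure is a rough estimate taken from the post, not a quote.

DRIVES_PER_BRICK = 25
DRIVE_PRICE_USD = (2_500, 3_000)    # low/high street price per SSD
HARDWARE_USD = (90_000, 100_000)    # low/high estimate for the whole hardware bundle
PREMIUM_USD = (250_000, 300_000)    # low/high amount EMC adds on top


def drive_cost_range():
    """Low/high cost of the 25 SSDs alone."""
    low, high = DRIVE_PRICE_USD
    return DRIVES_PER_BRICK * low, DRIVES_PER_BRICK * high


def markup_range():
    """Smallest and largest ratio of total price to hardware cost."""
    smallest = (HARDWARE_USD[1] + PREMIUM_USD[0]) / HARDWARE_USD[1]
    largest = (HARDWARE_USD[0] + PREMIUM_USD[1]) / HARDWARE_USD[0]
    return smallest, largest


if __name__ == "__main__":
    print("drives alone: $%d to $%d" % drive_cost_range())
    print("total / hardware: %.1fx to %.1fx" % markup_range())
```

With these inputs the total comes out at roughly 3.5 to 4.3 times the hardware estimate, which is where the "factor of three to four" comes from.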

So anyway, that’s about it for the major complaints. Other than that, setup was a breeze, including enabling drive encryption. Defining LUNs is simple. Mapping hosts to LUNs is simple. Monitoring is simple. Really the whole thing is very easy to navigate around and you’ll have your devices connected to their respective volumes in no time, provided you don’t have a huge VMware cluster and run into the initiator issue described above.

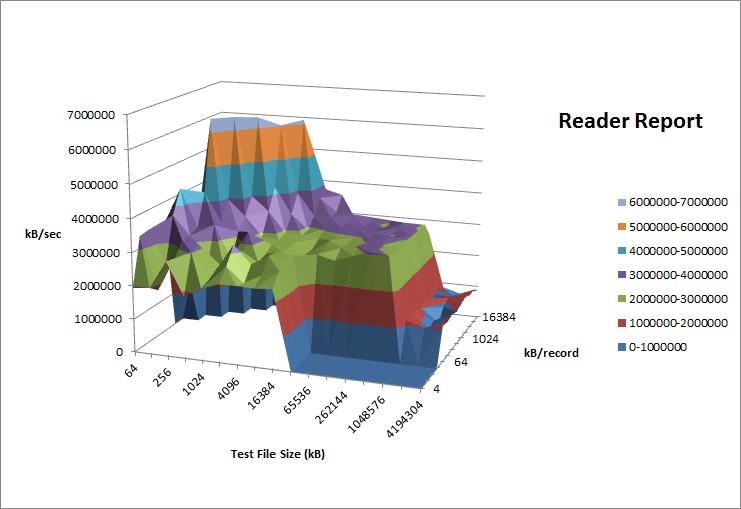

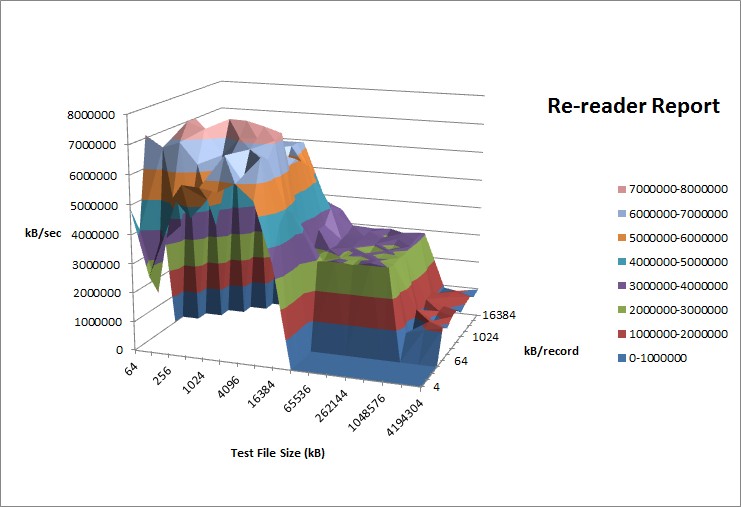

Once up and running, wow. I stuck one VM on a new LUN I created. The connectivity was four paths of 10 GbE from Cisco UCS to XtremIO via Brocade switches, 9000-byte jumbo frames, no routing, PowerPath/VE multipath I/O. Guest OS was CentOS 6 x86_64 with the VMware pvscsi paravirtualized SCSI controller, latest VMware Tools, only 2 GB of memory. I fired up the venerable Bonnie disk benchmarking tool just to do a quick initial run using a 20 GB dataset and saw, from the guest OS, 1000+ MB/sec block writes and more than 1300 MB/sec block reads, while the latency reported on the vSphere side stayed under 1ms the entire time, typically around 600 microseconds.

Bonnie version 1.03e, machine testsrv1, size 20G:

| Test | Rate | %CP |
|---|---|---|
| Sequential output, per chr | 80920 K/sec | 99 |
| Sequential output, block | 1053460 K/sec | 88 |
| Sequential output, rewrite | 432385 K/sec | 39 |
| Sequential input, per chr | 68528 K/sec | 91 |
| Sequential input, block | 1302352 K/sec | 59 |
| Random seeks | 12277 /sec | 50 |
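To turn the Bonnie K/sec columns into the MB/sec figures quoted above, a small conversion sketch follows. It assumes Bonnie's "K" is 1024 bytes (my understanding, not verified against the source here) and also shows the plain divide-by-1000 reading, since the two differ by a few percent.

```python
# Convert Bonnie's K/sec block figures to MB/sec.
# Assumption: Bonnie's "K" is 1024 bytes; pass bytes_per_k=1000 for the other reading.

BONNIE_K_PER_SEC = {
    "block write": 1_053_460,
    "rewrite": 432_385,
    "block read": 1_302_352,
}


def k_to_mb(k_per_sec, bytes_per_k=1024):
    """Return decimal MB/sec (1 MB = 1,000,000 bytes)."""
    return k_per_sec * bytes_per_k / 1_000_000


if __name__ == "__main__":
    for name, rate in BONNIE_K_PER_SEC.items():
        print("%-12s %8.1f MB/sec (K=1024)  %8.1f MB/sec (K=1000)"
              % (name, k_to_mb(rate), k_to_mb(rate, bytes_per_k=1000)))
```

Either way you slice it, block writes land above 1000 MB/sec and block reads above 1300 MB/sec.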

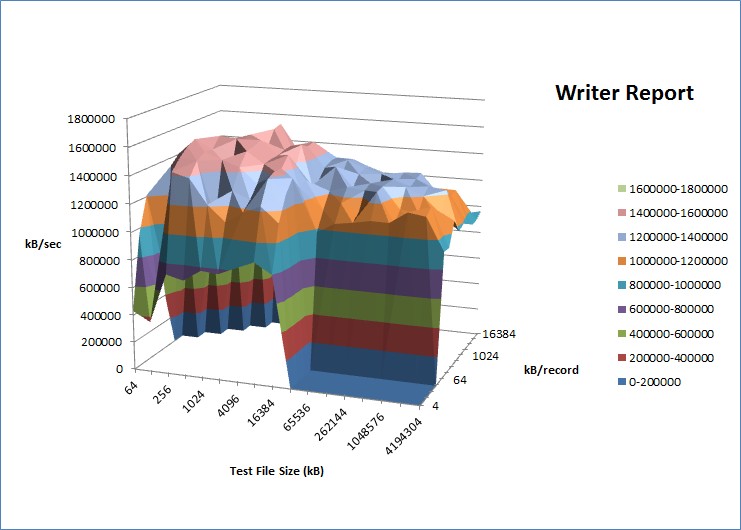

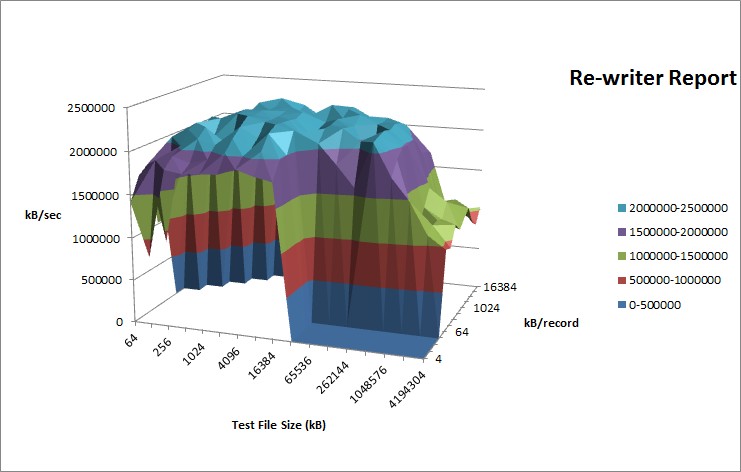

I ran IOzone with flags for just read/write, 4 GB data size. Saw a very comfortable initial write of 1000 to 1400 MB/sec, rewrite double that, reads and re-reads basically wire speed considering the underlying VMware setup includes 40 Gbit/sec in and out of the array.
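For a sense of what "wire speed" means here, this rough sketch works out the raw ceiling of four 10 Gbit/sec paths. It ignores Ethernet, TCP and iSCSI overhead, so real-world maximums will be lower, and the helper names are only illustrative.

```python
# Raw bandwidth ceiling of the iSCSI paths, ignoring all protocol overhead.

def link_ceiling_mb_per_sec(paths, gbit_per_path):
    """Aggregate link speed in decimal MB/sec (8 bits per byte)."""
    return paths * gbit_per_path * 1000 / 8


def fraction_of_ceiling(observed_mb_per_sec, paths=4, gbit_per_path=10):
    """How much of the raw ceiling an observed throughput represents."""
    return observed_mb_per_sec / link_ceiling_mb_per_sec(paths, gbit_per_path)


if __name__ == "__main__":
    print("ceiling: %.0f MB/sec" % link_ceiling_mb_per_sec(4, 10))
    print("1400 MB/sec is %.0f%% of that" % (100 * fraction_of_ceiling(1400)))
```

Four paths of 10 Gbit/sec give a raw ceiling of 5000 MB/sec, so plug in whatever your own IOzone run reports to see how close it gets.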

Here’s the raw report in PDF format: iozone-graph

Now, where there is some marketing fluff is the whole <1ms response time claim. EMC sales droids will tell you you’ll see <1ms response time pretty much all the time. My understanding is that what they really mean is you’ll see that if all you ever send it is 4 KB I/Os. In the real world, you’ll see things look more like this on a very regular basis:

Not that that’s too much to complain about since the old spinning media VNX it replaced would average in the 8 to 12ms range most of the time, so it’s certainly far better than that, but the rah rah <1ms thing is a bit of BS.

The other complaint is the data reduction ratio. EMC sales droids will tell you “Oh yeah, we see 8:1, 10:1, 12:1+ all the time, we see people getting 100 TB on a single brick system, it’s super duper awesome.” Well, yeah, that might be the case if you’re virtualizing Windows desktops where every friggin one contains the same 30 GB of Microsoft bloated crap, and your users are storing bloated file types like PDFs, Word docs, etc. You may even see pretty sweet reduction ratios if you’re using it for database work where your data contains a lot of compressible information. If you’re using it for anything that involves a lot of images though, you’re going to come nowhere near their claimed reduction ratios. The above picture is an XtremIO deployed in a web hosting environment, so a lot of the data consists of image files on the websites in question. As you know, images do not compress well at all, nor are they likely to have a lot of data in common from one to the next, so with a third of the array already used, it’s only at 2.1:1 reduction. Since that screenshot, I’ve gotten it up to 47% utilized and the reduction ratio crept up to 2.2:1, so there is still hope that we may edge closer to our goal of 2.5:1 by the time we get it into the 80% range, but we shall see. These boxes are definitely an Xtrem$$ proposition, so you better need the IOPS if you’re going to deploy them into the kind of environment that sees these poor reduction figures.
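To put those ratios in perspective, here is a rough sketch of the capacity math. It uses the raw 25 × 800 GB figure as a stand-in for physical capacity; actual usable space after RAID and metadata overhead is lower and isn't stated in this post, so treat the outputs as best-case numbers. The function names are made up for illustration.

```python
# Logical capacity vs. data reduction ratio.
# Assumption: raw capacity stands in for usable capacity, which is really lower.

RAW_TB = 25 * 800 / 1000  # 25 x 800 GB drives = 20 TB raw


def logical_tb(physical_tb, ratio):
    """Logical data that fits in physical_tb at a given reduction ratio."""
    return physical_tb * ratio


def required_ratio(target_logical_tb, physical_tb):
    """Reduction ratio needed to fit target_logical_tb into physical_tb."""
    return target_logical_tb / physical_tb


if __name__ == "__main__":
    print("ratio needed for 100 TB: %.1f:1" % required_ratio(100, RAW_TB))
    print("whole brick at 2.2:1: %.0f TB logical" % logical_tb(RAW_TB, 2.2))
    print("whole brick at 2.5:1: %.0f TB logical" % logical_tb(RAW_TB, 2.5))
```

Even in this best case, the "100 TB on a single brick" pitch needs at least 5:1, while 2.2:1 to 2.5:1 gets you somewhere around 44 to 50 TB.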

I’ll be continuing to update the review as I gain more experience with the array and apply more real world load to it.

Hi there, Dimitris from NetApp here.

Just a piece of advice regarding benchmarking anything that does inline dedupe and compression:

Don’t use tools that do all zeros or all repeating patterns. Bonnie for example produces a hugely compressible workload so you weren’t even hitting the SSDs when doing the test.

More here:

http://recoverymonkey.org/2013/02/25/beware-of-benchmarking-storage-that-does-inline-compression/
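A minimal Python sketch of the effect, for illustration only: zlib here is just a stand-in for whatever the array does inline (not its actual algorithm), and the 1 MiB buffer size is arbitrary.

```python
# Why all-zero benchmark data flatters an array that compresses inline.
# zlib is a stand-in for the array's own (different) reduction engine.
import os
import zlib


def compression_ratio(data):
    """Original size divided by zlib-compressed size."""
    return len(data) / len(zlib.compress(data))


def sample_buffers(size=1 << 20):
    """An all-zero buffer and a random buffer of the same size."""
    return bytes(size), os.urandom(size)


if __name__ == "__main__":
    zeros, random_data = sample_buffers()
    print("all zeros: %.0f:1" % compression_ratio(zeros))
    print("random:    %.2f:1" % compression_ratio(random_data))
```

The zero buffer shrinks to almost nothing while the random one does not shrink at all, so a benchmark that writes the former may barely touch the flash.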

Marketing-wise there’s a crazy amount of misinformation, where in fact most of the answers should be “it depends”.

Enjoy your purchase!

Thx

D